REQUEST COMPLIMENTARY SQLS*PLUS LICENCE

Improve MongoDB aggregation structure

Preamble

MongoDB recently introduced its new aggregation structure. This structure provides a simpler solution for calculating aggregated values rather than relying on powerful structures with a reduced map.

With just a few simple primitives, it allows you to calculate, group, shape, and design documents that are contained in a particular MongoDB collection. The remainder of this article describes the refactoring of the map reduction algorithm for optimal use of the new MongoDB aggregation platform. The complete source code can be found in the publicly available Datablend GitHub repository.

1. MongoDB aggregation structure

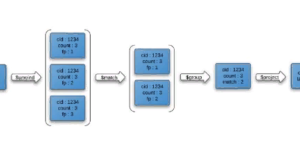

The MongoDB aggregation platform is based on the well-known Linux Pipeline concept where the output of one command is transmitted through a conveyor or redirected to be used as input for the next command. In the case of MongoDB several operators are combined into a single conveyor that is responsible for processing the document flow.

Some operators such as $ match, $ limit and $ skip accepting the document as input and output the same document if a certain set of criteria is met. Other operators, such as $ project and $ unwind, accept a single document as input data and change its format or form several documents based on a certain projection.

The $ group operator finally accepts several documents as input data and groups them into one document by combining the corresponding values. Expressions may be used in some of these operators to calculate new values or perform string operations.

Several operators are combined into a single pipeline, which applies to the list of documents. The conveyor itself is executed as the MongoDB Command, which results in a single MongoDB document, which contains an array of all documents that came out at the end of the conveyor. The next paragraph describes in detail the refactoring algorithm of molecular similarity as a conveyor of operators. Be sure to (re)read the previous two articles to fully understand the implementation logic.

2. Piping of molecular similarity

When applying a conveyor to a particular collection, all documents contained in that collection are passed on as input to the first operator. It is recommended that you filter this list as quickly as possible to limit the number of documents that are transferred via the pipeline. In our case, this means filtering the entire document that will never meet the target Tanimoto factor.

Therefore, as a first step, we compare all documents for which the number of fingerprints is within a certain threshold. If we target a Tanimoto factor of 0.8 with a target connection containing 40 unique fingerprints, the $ match operator will look like this:

{"$match" :

{ "fingerprint_count" : {"$gte" : 32, "$lte" : 50}}.

}

Only connections with a number of fingerprints from 32 to 50 will be transferred to the next pipeline operator. To perform this filtering, the $ match operator can use the index that we defined for the fingerprint_count property. To calculate the Tanimoto coefficient we need to calculate the number of common fingerprints between a certain input connection and the target connection we are targeting.

To work at the fingerprint level, we use the $ unwind operator. $ unwind removes the array elements one by one, returning the document stream in which the specified array is replaced by one of its elements. In our case, we apply $ unwind to fingerprints. Consequently, each composite document will result in n composite documents, where n is the number of unique fingerprints contained in a composite document.

{"$unwind" :"$fingerprints"}

To calculate the number of common fingerprints, we will start by filtering all documents that do not have the fingerprints that are on the fingerprint list of the target connection. To do this, we use the $ match operator again, this time filtering the fingerprint property, where only documents that contain a fingerprint that is in the target fingerprint list are supported.

{"$match" :

{ "fingerprints" :

{"$in" : [ 1960 , 15111 , 5186 , 5371 , 756 , 1015 , 1018 , 338 , 325 , 776 , 3900 , ..., 2473] }

}

}

Since we only match the fingerprints that are in the target fingerprint list, the output can be used to calculate the total number of common fingerprints.

To do this we apply the $ group operator to the composite connection, although we create a new type of document containing the number of matching fingerprints (by adding up the number of occurrences), the total number of input connection fingerprints, and smileys.

{"$group" :

{ "_id" : "$compound_cid". ,

"fingerprintmatches" : {"$sum" : 1} ,

"totalcount" : { "$first" : "$fingerprint_count"} ,

"smiles" : {"$first" : "$smiles"}

}

}

Now we have all parameters to calculate the Tanimoto coefficient. To do this, we will use the $ project operator which, in addition to copying the id and smiles composite property, also adds a newly calculated property named Tanimoto.

{

"$project"

:

{

"_id"

:

1

,

"tanimoto"

:

{

"$divide"

:

[

"$fingerprintmatches."

,

{

"$subtract"

:

[

{

"$add"

:

[

40

,

"$totalcount"

]

}

,

"$fingerprintmatches."

]

}

]

}

,

"smiles"

:

1

}

}

Since we are only interested in connections that have a Tanimoto target coefficient of 0.8, we use the optional $ match operator to filter out all those that don’t reach this coefficient.

{"$match" :

{ "tanimoto" : { "$gte" : 0.8}

}

The command of the complete pipeline can be found below.

{"aggregate" : "compounds"} ,

"pipeline" : [

{"$match" :

{ "fingerprint_count" : {"$gte" : 32, "$lte" : 50} }

},

{"$unwind" : "$fingerprints"},

{"$match" :

{ "fingerprints" :

{"$in" : [ 1960 , 15111 , 5186 , 5371 , 756 , 1015 , 1018 , 338 , 325 , 776 , 3900,... , 2473] }

}

},

{"$group" :

{ "_id" : "$compound_cid" ,

"fingerprintmatches" : {"$sum" : 1} ,

"totalcount" : { "$first" : "$fingerprint_count"} ,

"smiles" : {"$first" : "$smiles"}

}

},

{"$project" :

{ "_id" : 1 ,

"tanimoto" : {"$divide" : ["$fingerprintmatches"] , { "$subtract" : [ { "$add" : [ 89 , "$totalcount"]} , "$fingerprintmatches"] }. ] } ,

"smiles" : 1

}

},

{"$match" :

{"tanimoto" : {"$gte" : 0.05} }

} ]

}

The output of this pipeline contains a list of connections that have Tanimoto 0.8 or higher in relation to a particular target connection. A visual representation of this conveyor can be found below:

3. Conclusion

The new MongoDB aggregation structure provides a set of easy-to-use operators that allow users to express algorithms of card reduction type more briefly. The concept of a conveyor below it offers an intuitive way to process data.

Not surprisingly, this pipeline paradigm is adopted by various NoSQL approaches, including Gremlin Framework Tinkerpop in the implementation and Cypher Neo4j in the implementation.

In terms of performance, the piping solution is a significant improvement in the implementation of the reduction maps.

Operators are initially supported by the MongoDB platform, which leads to significant performance improvements over the interpreted Javascript. Since the Aggregation Framework can also operate in an isolated environment, it easily exceeds the performance of my original implementation, especially when the number of input connections is high and the Tanimoto target is low. Excellent performance from the MongoDB command!

Aggregation | MongoDB | Tutorial

Enteros

About Enteros

Enteros offers a patented database performance management SaaS platform. It proactively identifies root causes of complex business-impacting database scalability and performance issues across a growing number of RDBMS, NoSQL, and machine learning database platforms.

MORE NEWS

PreambleNoSql is not a replacement for SQL databases but is a valid alternative for many situations where standard SQL is not the best approach for...

PreambleMongoDB Conditional operators specify a condition to which the value of the document field shall correspond.Comparison Query Operators $eq...

5 Database management trends impacting database administrationIn the realm of database management systems, moreover half (52%) of your competitors feel...

The data type is defined as the type of data that any column or variable can store in MS SQL Server. What is the data type? When you create any table or...

PreambleMS SQL Server is a client-server architecture. MS SQL Server process starts with the client application sending a query.SQL Server accepts,...

First the basics: what is the master/slave?One database server (“master”) responds and can do anything. A lot of other database servers store copies of all...

PreambleAtom Hopper (based on Apache Abdera) for those who may not know is an open-source project sponsored by Rackspace. Today we will figure out how to...

FlexibilityOne of the most advertised features of MongoDB is its flexibility. Flexibility, however, is a double-edged sword. More flexibility means more...

PreambleSQLShell is a cross-platform command-line tool for SQL, similar to psql for PostgreSQL or MySQL command-line tool for MySQL.Why use it?If you...

PreambleWriting an application on top of the framework on top of the driver on top of the database is a bit like a game on the phone: you say “insert...

PreambleOracle Coherence is a distributed cache that is functionally comparable with Memcached. In addition to the basic function of the API cache, it...

PreambleIBM pureXML, a proprietary XML database built on a relational mechanism (designed for puns) that offers both relational ( SQL / XML ) and...

What is PostgreSQL array? In PostgreSQL we can define a column as an array of valid data types. The data type can be built-in, custom or enumerated....

PreambleIf you are a Linux sysadmin or developer, there comes a time when you need to manage an Oracle database that can work in your environment.In this...

PreambleStarting with Microsoft SQL Server 2008, by default, the group of local administrators is no longer added to SQL Server administrators during the...

PreambleWhen administering PostgreSQL database servers, one of the most common tasks you will probably perform is enumerating databases and their tables....